Artificial intelligence is today’s talisman. It appears in every pitch deck, investor briefing and product brochure, promising a transformation that will leave competitors behind and generate outsized returns. Yet in the course of Technical Due Diligence, claims of being “AI-powered” often dissolve under scrutiny, revealing little more than experimentation or, in some cases, nothing beyond the marketing slide.

This is not new in technology markets. Every wave of innovation has carried with it a period of exaggeration before maturity. What makes the AI wave distinctive is the intensity of hype, the speed with which vendors feel compelled to attach AI to their proposition, and the genuine difficulty many non-specialists have in telling the difference between robust implementation and vapourware.

When “AI” Is Not Really AI

A common observation is that many companies describe themselves as using AI when their solutions are, at best, rules-based automation or statistical analysis. There is nothing wrong with a well-designed algorithm, but it is misleading to suggest machine learning or generative models where none exist. In our previous piece, When Every Product Claims to Be ‘AI-Powered’, we explored the risks for product leaders in allowing marketing to outpace reality. For diligence teams, the task is to strip away the branding and determine what the technology actually does.

Experimentation Without Execution

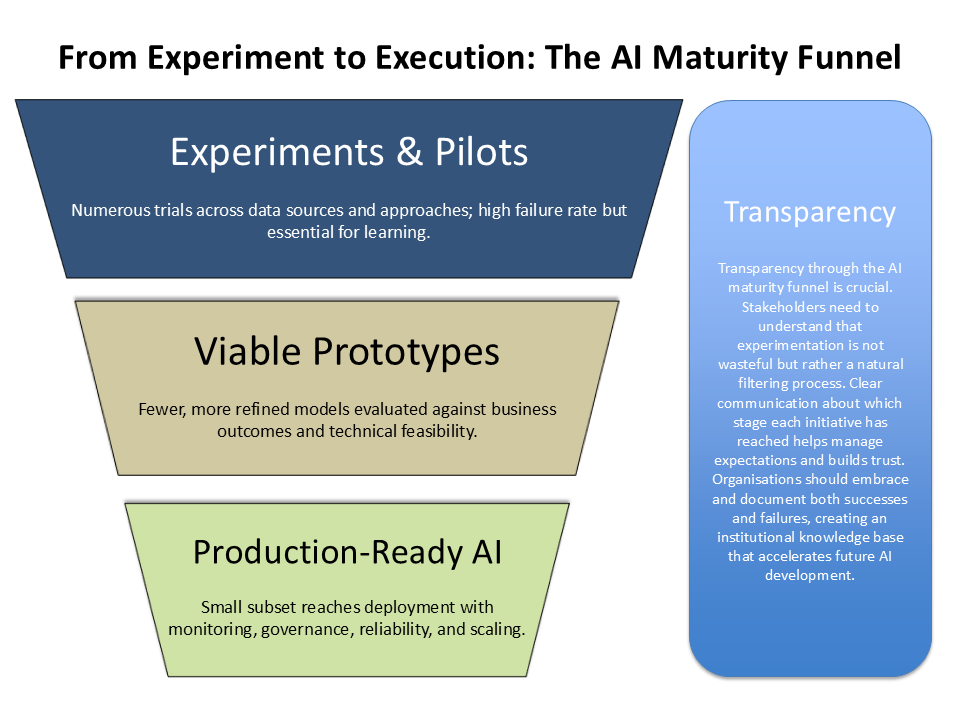

Another familiar pattern is the presence of early-stage pilots, proof-of-concept models or academic-style experiments, but little evidence of these being translated into production. A company might proudly present a research environment or lab demo while the live platform continues without any AI contribution at all. This disconnect is particularly significant in due diligence, since it raises questions not only about technological maturity but also about organisational capability to bring innovation into the core product.

Borrowed Brilliance

The growing ecosystem of pre-trained models and third-party services has created another layer of confusion. Many firms bolt on a commodity service from a hyperscaler, or an openly available model, and present it as if it were proprietary. Once again, there is no inherent problem with leveraging external tools, but investors should be clear on the true intellectual property within a target company and whether differentiation really exists. As we discussed in What Does AI Readiness Look Like in Technical Due Diligence?, the ability to integrate responsibly and sustainably with external AI services is part of the readiness test.

Ethical and Regulatory Overstatements

Sometimes the claims are less about technology and more about compliance. A growing number of vendors insist that their AI is “fully compliant with upcoming regulations” or “bias-free by design”. Given that the UK and European regulatory frameworks are still developing, such assertions should trigger deeper questioning. It is essential to distinguish between a company that has put in place thoughtful governance measures – as we outlined in You Can’t Govern What You Don’t Understand – and one that has simply reassured the market without evidence.

Questions That Uncover the Reality

Spotting the AI mirage is not just about technical interrogation but about asking the right questions. Is there clear documentation of the models in use and the training data behind them? Is there evidence that pilots have been translated into production environments at scale? What proportion of the code base or service is genuinely proprietary, and what is repackaged from elsewhere? How are ethical risks, particularly bias and discrimination, being monitored in practice rather than in theory?

Why This Matters

For private equity investors and acquirers, overstatement is not merely an irritation but a material risk. A valuation that assumes AI-led growth will not hold if the technology is absent, immature or indistinguishable from competitors. Worse, overstated claims can conceal gaps in governance or engineering discipline that extend beyond AI. Just as we would flag outdated infrastructure or unmanaged technical debt, we should treat inflated AI positioning as another warning signal.

The task of due diligence is not to dismiss ambition but to understand what is real, what is near-term, and what remains speculative. In doing so, investors are better placed to value a business correctly and management teams can receive the support they need to turn early experiments into lasting impact.